Research Truth and BS: How to Speak Science

In Chapter 3, I told you about my rapid transformation from sick, overweight, and sluggish patient to healthy, lean, energetic triathlete. Had I been an accountant, a bricklayer, a rock musician, that would have been the end of the story. But I was a doctor, a surgeon—and a weight-loss surgeon at that. My old proteinaholic views on nutrition were public record, enshrined in Chapter 12 of my 2008 book, The Expert’s Guide to Weight-Loss Surgery. Just to rub my nose in it, here’s a sample passage advising patients what to eat once they begin recovering from the surgery:

“Strained cream soups and yogurt-based shakes and smoothies are good choices. A little cream of wheat with protein powder added or grits thinned with skim milk—even tuna and chicken salad that have been blended until there are no distinct pieces remaining—are also good options. Sugar-free pudding and gelatins are okay, too; add protein powder for added nutrition. Well-mashed scrambled eggs or egg substitute and puréed low-fat cottage cheese are also good protein sources.”

Ugh.

A book is one thing, while television is a whole other level of exposure. In 2006, an old college buddy and I got together and were chatting about our careers. He, it turned out, had gotten into television. When he heard about my career in bariatric surgery, his Nielsen rating detectors started going off. “That would make a great reality show,” he mused.

The following year, Big Medicine debuted on TLC. Here’s the network’s description of the show: “The father and son team of Drs. Robert and Garth Davis perform innovative bariatric surgeries at the Methodist Weight Management Center in Houston. The series chronicles the emotional transformations of obese people who have opted to undergo weight-loss surgery, capturing the process before and during the operation, through recovery and post-op care . . .”

Big Medicine ran for two seasons and established me and my dad as “celebrity” weight-loss surgeons. So by the time I discovered that my views on diet and nutrition were all wrong, I had a thriving practice based, in part, on those errors. I began to feel sheepish: How in the world was I going to start telling my patients the exact opposite of what I had been preaching for years?

And feelings of embarrassment aside, my recovery from proteinaholism raised a larger question: How should I be treating patients differently, based on everything I now know?

My transformation, while dramatic and extremely personal, was still anecdotal, a case study of one. Before I was going to change my practice model, I needed to dive into the nitty-gritty of clinical research. Not just the Blue Zone correlations, or the prospective population studies. I had to immerse myself in the totality of evidence, including biochemical laboratory research and controlled clinical trials, in order to treat my patients confidently with my newly adopted plant-based protocols.

And while I was known for my contributions to the weight-loss field, I was acutely aware that the real goal was improved health and freedom from disease, not simply a slim body still in failing health.

I wanted to see if a plant-based diet could treat obesity, sure. But to prescribe it enthusiastically, I wanted to see evidence that it could help diabetic and prediabetic patients. Patients with hypertension and other cardiovascular diseases. Patients facing the terrifying diagnosis of cancer. Patients suffering from any condition likely to shorten their life span.

The more I looked, the more conditions I found that could be prevented, arrested, reversed, and even cured with a protocol that included a plant-based diet. Diseases as varied and disabling as rheumatoid arthritis, ulcerative colitis, diverticulitis, and depression responded well to a plant-based intervention. In study after study, I found support for my decision to treat all my patients the way I had successfully treated myself.

So Parts III and IV of this book, for me, are a bit of redemption: a chance to right my prior book’s mistakes, this time with evidence-based dietary recommendations. These sections will be absolutely full of science. Maybe too much for many people, but if I leave out too much, I will be accused of speculating. My use of plant-based diets for my patients is not an ideologic decision but rather an evidence-based practice.

Before we jump in, though, I want to make sure that I don’t just add to your confusion by contradicting everything you’ve heard before. Prior to surveying the scientific evidence favoring a plant-based diet, let’s look at how to evaluate scientific research. So the next time someone says, “But I heard that cholesterol levels don’t matter,” or “I saw an article that bone broth was really good for me,” you’ll be able to look at the data and decide for yourself if the sources are valid and trustworthy.

A Glut of Information and a Famine of Wisdom

People who know nothing about nutrition often offer the following statement as unassailable truth: “There’s no such thing as a diet that’s right for everyone.” Where does this firm belief in “nutritional relativism” come from? It’s common sense that people with food allergies and sensitivities should avoid foods that trigger them, but the science is clear and overwhelming that there is a fundamental dietary pattern that has been shown to be superior in every population where it’s been studied.

A number of popular and misinformed nutritional “experts” promote a fantasy they call “bio-individuality,” meaning that we’re all different and need to eat based on our body’s own inner wisdom. That’s fine in theory, but in practice it usually means choosing the foods we crave over the ones that can heal us. Imagine telling a cocaine addict to listen to his body. He’d be bent over a mirror with a glass straw up his nose as soon as his current high started to fade.

But nutritional relativism is much bigger than that. It’s largely the product of a tidal wave of conflicting information, much of it created expressly to sow confusion and doubt. Bloggers and social media outlets jump on any study that appears to support their worldview and create sensational headlines as “click bait” to increase page views and advertising revenue. The layperson, sincerely looking for guidance on how to eat healthy, is easily swept up in the rhetoric. Without the medical community guiding them, too many make decisions based on the writer’s or speaker’s eloquence and charisma, rather than an honest review of the science. In fact, the people actually doing the science are largely absent from social media and the popular press, leaving a vacuum that allows industry-funded charlatans to sell their nonsense.

Without a science-based rudder to steer the correct course, our eating patterns have changed dramatically. Fad diets—many of them plainly crazy and some alarmingly dangerous—are the norm. The diet supplement business is thriving. We spend $80 billion a year on diets and diet supplements, yet we have managed only to diet ourselves fatter. We’ve become obese and ill not just from consuming the wrong foods, but also the wrong information. The goal of this chapter is to help you become a smart consumer of information, so you can protect yourself and your loved ones from bad science and harmful food choices.

My Scientific Credentials

Although medicine didn’t teach me about nutrition, it did make me an expert in experimental design. Med school, internship, and residency, and the process of becoming board certified, all trained me to read, understand, evaluate, and integrate complex scientific studies. After my “popular” introduction to the idea of a plant-based diet through books and websites, I began to devour the scientific literature with the same ravenous appetite I already had for research on bariatric surgery and other relevant medical topics. I started attending professional meetings for medical weight loss and treating patients without surgery. I used my ability to read scientific journals and my access to countless articles to examine the actual science of nutrition in depth.

I keep up with nutritional literature, poring through dozens of journals and analyzing many scientific articles each month to be sure I’m sharing the best and latest information with my patients and readers. And because I have a busy clinical practice, I get to see the results of that information in action. Native American leaders would often apply the following question to any new idea or philosophy: “Does it grow corn?” In other words, does the sparkly new idea or exciting concept actually work in the real world to benefit human beings? Since I see hundreds of patients each week, I quickly get real-world feedback on the truth and usefulness of scientific findings.

My ongoing research, as well as my successful clinical application of the plant-based diet, continues to confirm my initial conclusion: humans should be eating mostly plants, and limiting or eliminating animal products.

Once I saw the weight of evidence and applied it to help my patients lose weight and get healthier, I had to confront the question: Why is there still such heated debate on this issue? Our current understanding of nutrition reminds me of the 1946 R.J. Reynolds advertisement, “More doctors smoke Camels than any other cigarettes.” We’ve known that cigarette smoking is a health disaster for at least the past fifty years. No present-day doctor would dare recommend smoking, and no citizen would believe that doctor if he or she did.

When it comes to nutrition, the evidence is even clearer than with nicotine. And the stakes are higher: far more people will suffer and perish from bad food than ever did from cigarettes. So I had to ask: What’s wrong with how nutritional science is conducted, reported, and understood in our society? And given that truths are generally drowned out by half-truths and downright falsehoods, how can ordinary citizens figure out what’s right and wrong?

The answers to these questions are of far more than theoretical value. When you can see behind the curtain, you’ll know how to protect yourself from “information-borne illnesses.” The goal of this chapter is to teach you how to “speak science.” Specifically, I’ll show you how to evaluate the research studies you hear about on TV and read about in blogs, magazines, newspapers, and on your Facebook feed. You don’t need to become an “expert”; a little interest and effort can keep you from becoming a sucker for bad ideas.

Key Issues

Little Dots and Big Pictures

The single biggest problem with science and science reporting is reductionism, a mind-set that obsesses over tiny details at the expense of the big picture. Have you ever zoomed in on a digital photograph? When you make the picture big enough, the overall image is no longer apparent; instead, you see individual dots, or pixels, each with a specific color. Even if the scene depicted is a blue sky, not every pixel will be blue. Some will be black, others white, still others red or brown or dark green or orange. The effect of the blending and melding of all the individual pixels generates the complete image.

I want you to hold on to that metaphor as we look at scientific research. Individual studies are the dots; the full breadth of research is the whole picture. Just as there are orange pixels contributing to the blue sky, there are studies whose findings appear to contradict the preponderance of evidence. Can you imagine someone insisting that, based on his discovery of an orange pixel, the entire sky in this photograph must therefore be orange? All it takes to disprove this absurd suggestion is to pan back out and view the big picture.

But in science, views of the big picture (what we call the “preponderance of evidence”) are uncommon, and not greatly valued. It is very difficult to actually study the big picture. Instead, scientists tend to focus on a few, easily controlled variables. Most academics live in a “publish or perish” environment, where their jobs depend on them being able to get studies into the journals, so science tends to gravitate to the study of these easily controlled variables.

Studies differ in how much of the whole picture they can show. A decades-long population study, rigorously carried out, tells us much more than a lab study conducted over four days. And there’s a massive trade-off, generally speaking, between how much a study can teach us, and how much it costs to run. All things equal, the shorter the study, the less it costs. So science tends to favor short and sweet studies that produce isolated pixels of data over long, comprehensive studies that can give us entire swaths of landscape. To extend the metaphor, a single snapshot of the sky gives us much less valuable information than a time-lapse video taken over an entire season.

The problem is not the small studies that produce the pixels. Our sky photo needs the orange pixels to give us a vivid picture of the blue sky. The problem, rather, is that we—scientists, journalists, policymakers, and the public alike—don’t realize that there is a big picture. When the New York Times reports on the discovery of a new orange pixel, we need to see it in context of the big picture. Our society worships pixels and doesn’t believe in photographs. Therefore we see a story about an orange pixel and start wondering what color the sky is.

Once we’ve lost our ability to tell the difference between reality and fantasy, all sorts of nefarious forces can sneak into our minds and public discourse to profit from that confusion. The point being: never take one isolated study as “proof.” If you want to really research the science, then you need to cast a wide net. It is vital to look at the big-picture studies, as well as the smaller studies looking at individual variables. You then should be able to put this all together. For instance, let’s say there is a study that shows drinking milk increases a hormone that may increase risk of prostate cancer. That doesn’t mean milk causes prostate cancer. But if there are several studies that confirm this change, and there is a controlled trial showing that men who drink milk have higher PSA, and there are long-term epidemiologic studies in several countries that show men who consume dairy have higher rates of prostate cancer, then you now have developed a much fuller picture.

Industry Influence

The food industry, one of the largest in the country, has a vested interest in keeping the public confused. Their marketing and PR departments have studied at the feet of the tobacco companies, whose private motto for decades was “Our product is doubt” (Freudenberg, 2014). If they sell a food product found to be harmful, they simply refer to the odd assortment of obscure articles that actually show the food to have some benefit, or even a study that shows one ingredient of their product to be good for you. Many scientists are on the payroll of food companies, hired to prostitute their professional credibility by publishing misleading articles and promoting bad science at industry meetings. The dairy industry actually held a meeting where they developed a goal to “neutralize” the science that dairy may be bad for the public.

Is meat good or bad for you? Who knows, right? We’ve all seen evidence for both sides. And if we don’t really know, here’s where we end up: we believe what we want to believe. So when the TV commercial features a juicy steak sizzling in a grill pan, we focus on what we’ve heard about life-giving protein and ignore the little voices warning of heart disease from saturated fat and cholesterol and the cancer-causing heterocyclic amines. And off we go to the steakhouse.

Because the food industry can afford a much bigger megaphone than the honest nutritional scientists toiling away in their offices and labs, we hear about many more orange pixels than there actually are in the big picture. After a while, it starts looking like the entire sky is tinged with orange, to our surprise and delight.

This probably isn’t the first time you’ve heard about wealthy and powerful interests hiding or distorting facts to maintain their wealth and power. What we need to do to break their grip is examine, in some detail, exactly how they succeed in promoting a false and self-serving “orange pixel” agenda at the expense of true blue sky.

Funding Determines Outcome

While certain scientists have traded all their professional credibility for industry money, others believe themselves to be impartial and independent. Unfortunately, virtue doesn’t keep the lights on in the lab or pay the stipends of graduate assistants. So even the most noble seekers of truth have to raise the money for their research somewhere. And evidence shows that researchers who accept corporate money find in favor of their benefactors’ interests a curiously high percentage of the time. I know many scientists who insist that industry funding is essential in order to conduct studies, and I do think they believe it will not influence results.

However, there have been many studies over the years showing that big pharmaceutical companies can influence science in their favor. Recently, it has been found that big agribusiness is doing the same thing. A review was performed of 206 recent studies on the health effects of milk, juice, and soda. Of those studies, 111 declared financial ties to industry, receiving part or all of the funding for the study from the manufacturer of the beverage in question. (Keep in mind that these are only the known ties. Many other financial arrangements can be hidden.) The 111 industry-funded studies showed zero unfavorable findings. That’s right, not a single one found evidence that the beverages in question were harmful. Let that sink in for a moment: no study that received funding showed anything bad about consuming soda, juice, or milk. Can you imagine anything more ridiculous? The 95 unbiased articles, on the other hand, found evidence of harm 37 percent of the time. This is a very significant difference, demonstrating clearly that science can be bought (Lesser, Ebbeling, et al. 2007).
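To get a feel for how lopsided that split is, here is a rough back-of-the-envelope check. It is only an illustration, not the analysis the review’s authors ran. It assumes that 37 percent of the 95 independent articles works out to about 35 unfavorable articles (a rounded figure), and it asks how likely it would be for every one of them to land in the independent group by pure chance if funding made no difference. The function name is made up for this example.

```python
from math import comb

def chance_all_unfavorable_in_unfunded(funded, unfunded, unfavorable):
    """Probability that every unfavorable study lands in the unfunded
    group if unfavorable findings were scattered at random across all
    studies (a one-sided Fisher exact test for this extreme split)."""
    total = funded + unfunded
    return comb(unfunded, unfavorable) / comb(total, unfavorable)

funded = 111                           # industry-funded studies (from the review)
unfunded = 95                          # studies with no declared industry funding
unfavorable = round(0.37 * unfunded)   # ASSUMPTION: 37 percent of 95, rounded to 35

p = chance_all_unfavorable_in_unfunded(funded, unfunded, unfavorable)
print(f"Unfavorable studies assumed: {unfavorable}")
print(f"Chance of this split if funding did not matter: {p:.1e}")
```

The printed probability is vanishingly small, which is the arithmetic behind calling the difference “very significant.”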

Biased science can sometimes appear in highly regarded professional publications like the New England Journal of Medicine, but the easiest route to publication comes via what I think of as “junk journals.” The equivalent of diploma mills, these journals take advantage of academics’ career pressure to “publish or perish” by accepting and disseminating any article, no matter how bad. The hallmark of a good journal is a process known as “peer review,” in which experts in the field evaluate the study for quality and refuse publication to those studies that don’t meet rigorous research standards. Peer review by no means guarantees a good article, but it is one of the best filters we have.

The junk journal industry produces a huge volume of scientific research, much of it poorly executed, that allows authors to add the reference to their resume. There are many journals that now exist to accommodate the seemingly infinite number of studies that would not survive true peer review.

A journalist recently wanted to shed light on this issue, so he conducted a deliberately shoddy experiment in which he fed chocolate daily to just a few people. He looked at multiple variables over a short period, knowing that if you follow a few people for a short period, some variable will, simply by chance, appear to be significant. In this case he found that the group that ate chocolate daily, again just by chance, lost more weight over the short period of his purposely flawed study. He then created a false name complete with Ph.D. credentials and paid a junk journal to publish this ridiculous article. Lo and behold, seventeen different media outlets ran stories saying chocolate can reduce weight.
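The trick of measuring many things and waiting for one to “hit” can be put into numbers. The sketch below is a simplified illustration under two assumptions that are mine, not the journalist’s: each measure is tested at the conventional 5 percent significance threshold, and the measures are independent of one another. The counts of measures are hypothetical, chosen only to show the trend.

```python
def chance_of_false_positive(num_measures, alpha=0.05):
    """Probability that at least one of several independent measures
    crosses the significance threshold purely by chance."""
    return 1 - (1 - alpha) ** num_measures

for num_measures in (1, 5, 10, 18):   # hypothetical counts, not taken from the study
    p = chance_of_false_positive(num_measures)
    print(f"{num_measures:>2} measures: {p:.0%} chance of a fluke 'finding'")
```

The more outcomes a small study tracks, the more likely it is that one of them will look like a discovery when nothing real is going on.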

The Government/Industry Connection

You might think that the government wouldn’t lie to us, mislead us, or hide information that could be crucial to our health. After all, the government is by the people and for the people, and therefore not susceptible to false marketing claims. Nothing could be further from the truth.

It is fascinating to watch the different food lobby groups testify before Congress whenever they are voting on a bill that pertains to how we should eat. At the United States Department of Agriculture (USDA) hearings on the Food Pyramid and MyPlate (the public nutrition education campaign that gets taught in schools and has many policy implications), the dairy, meat, egg and soda lobbyists were all present to protect their special interest. That interest being, of course, their bottom line rather than your health.

Surely the USDA listens to these industry shills with a grain of salt. After all, there’s so much objective science that undermines their claims. Unfortunately, the USDA is required by law to be sympathetic to their positions. The USDA is charged to not only make sure food is safe and good for you, but to also make sure that the businesses that produce food are profitable. Can you see how the USDA can find itself in conflict? Worse yet, when conflict does arise between what is good for us and what is profitable, guess who wins? Profit, just about every time.

The Death of Expertise

To recap: a certain percentage of scientific research has been hijacked by a commercial agenda seeking to convince us that harmful foods are health-promoting. And a slew of low-quality journals have come into existence to provide an outlet for unsupervised junk science. When debates occur in front of government officials, the truth is usually sacrificed to the profit motive. Given this environment of more misleading information than we can possibly keep track of and sort out for ourselves, a couple of influential groups have stepped into the breach to complete the catastrophe: journalists and health bloggers.

These folks rely on a website called PubMed, a free public search engine that indexes all the articles tracked by MEDLINE (Medical Literature Analysis and Retrieval System Online), a database maintained by the U.S. National Institutes of Health. On the surface, this database seems like a great thing—and it can be, if you use it right. Unfortunately, the full articles are not available via PubMed—just the abstracts (brief summaries of just a few paragraphs). If you want to see the full article, you often have to pay for the privilege. Furthermore, few reporters or bloggers will take the time to read the full article, complete with confusing scientific jargon and complex tables and charts. Even if they did, most are not equipped to understand the intricacies that go into judging whether a study was properly executed. Instead, the last line of the summary is sensationalized and converted into the front page of the morning news, regardless of whether that study has any scientific value or not.

Science writer Julia Belluz notes that reporters and scientists approach research quite differently. Reporters want to know “what’s new,” while scientists are trained not to trust brand-new results that contradict established findings. The result? Small, badly designed studies with anomalous findings are reported as medical breakthroughs, instead of outlier data that needs to be replicated with rigor before informing public discussion and policy. Belluz points to a 2003 review of how so-called highly promising basic research fared as it was translated into clinical experiments and implementation. Of 101 articles published between 1979 and 1983 that claimed a “novel therapeutic or preventive” technology, only five had been licensed for clinical use by 2002, with just a single technology in widespread use. If health journalists in the early 1980s were as trigger-happy as many are today, the public would have had their hopes raised for 96 miraculous new treatments and cures that turned out to be total duds.

Increasingly, journalists are becoming the new health gurus in our society. Those who can write and speak with charm and conviction hold sway, even getting invited to keynote supposedly rigorous professional meetings where they are treated like celebrities. And unlike true scientists, who are bound by professional ethics to always question their current theories and actively seek evidence to refute or refine them, the health journalists tend to jump on their horses and ride unwaveringly into the sunset of whatever fad they’ve taken a fancy to.

Example: The Terrible “Meat Eaters Are Healthier Than Vegetarians” Study

In a world where health journalists lack perspective, expertise, and time, and where many have their own axes to grind, any bad study can become grist for the public confusion mill. In February 2014, researchers from the Medical University of Graz in Austria published a study in an online journal (Burkert, Muckenhuber, et al. 2014) that bore shocking news: vegetarians are significantly less healthy than meat eaters. This “man bites dog” story was bound to attract media attention: it looked authoritative, including lots of complicated terms and numbers and charts, it told a counterintuitive story, and it made millions of meat eaters feel better about their dietary choices.

Sure enough, the media did pick up on the story. On April 1, 2014 (a date chosen without any apparent irony), Benjamin Fearnow, a reporter for CBS News Atlanta, wrote an article headlined “Study: Vegetarians Less Healthy, Lower Quality of Life Than Meat-Eaters.” The article repeated the published findings:

“Vegetarians were twice as likely to have allergies, a 50 percent increase in heart attacks and a 50 percent increase in incidences of cancer. . . . Vegetarians reported higher levels of impairment from disorders, chronic diseases, and suffer significantly more often from anxiety/depression.”

Similar articles reporting on the Austrian study began appearing in print and online (a Google search in March 2015 returned over six thousand results for the phrase “Austrian study vegetarians less healthy lower quality of life”).

Knowing what I know about thousands of articles that reach the opposite conclusion, my BS alarm started ringing. Despite this, I didn’t immediately dismiss the original study. Perhaps there was something new and valuable that I could learn from it. So instead of relying on the article abstract or other journalists’ work, I found and downloaded the actual article from PLOS ONE journal. You can do the same; unlike most published research, the article is under an open-access license. Here’s an easy web link that will get you there: j.mp/bad-veg-study.

The study looked at over 15,000 people. Since the point of the study was to compare the relative health of meat eaters and vegetarians, I would expect that it would have included a decent number of vegetarians. Shockingly, only about 2 percent of participants were vegetarians. Just 343 vegetarians. With such a low number there was no way to perform an adequately powered statistical analysis, so the authors didn’t try. Instead, they compared the few vegetarians they had with age-matched meat eaters. Some of the vegetarians had no age-matched counterparts, so they were dropped from the study. Now we’re down to 330 vegetarians.

Okay, we’re clearly off to a bad start. But we can salvage the study to some extent. If we take these few vegetarians and compare them with meat eaters and follow both groups for years and see how they do, we’ll certainly learn something of value. Is that what this study did? Of course not.

The researchers conducted one telephone interview with the study participants to assess their health and eating habits. So do we know how long they have been vegetarian? No. Could they have turned vegetarian because they were sick? Of course. Many people facing a diagnosis of heart disease or cancer adopt a vegetarian diet. Despite the fact that the diet may have been used as treatment, it was misidentified as a potential cause. That’s like saying that insulin injections cause diabetes, since we see lots of diabetics taking insulin injections. That’s the problem with a static snapshot study (called a cross-sectional study): not only can’t it distinguish causation from correlation, it’s liable to infer backward causation as we see here. This was a one-day study, not a multiple-year prospective study like other articles I will share later in this section.
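Backward causation is easy to demonstrate with a toy simulation. Everything in the sketch below is invented for illustration: the disease rate, the share of lifelong vegetarians, and the share of sick people who switch diets are all hypothetical numbers, not figures from the Austrian study. By construction, diet has no effect on illness in this imaginary population; the only link is that some people turn vegetarian after they get sick.

```python
import random

def simulate_snapshot(n=100_000, disease_rate=0.20, veg_rate=0.02,
                      switch_rate=0.05, seed=1):
    """Simulate a one-day survey in which diet has NO effect on disease,
    but some people turn vegetarian after they get sick."""
    rng = random.Random(seed)
    counts = {"veg": [0, 0], "meat": [0, 0]}   # [sick, total] per group
    for _ in range(n):
        sick = rng.random() < disease_rate        # illness is independent of diet
        vegetarian = rng.random() < veg_rate      # lifelong vegetarians
        if sick and not vegetarian and rng.random() < switch_rate:
            vegetarian = True                     # switched diet because of the diagnosis
        group = "veg" if vegetarian else "meat"
        counts[group][0] += sick
        counts[group][1] += 1
    return {g: sick / total for g, (sick, total) in counts.items()}

rates = simulate_snapshot()
print(f"Sick among vegetarians: {rates['veg']:.0%}")
print(f"Sick among meat eaters: {rates['meat']:.0%}")
```

With these made-up rates, the vegetarians in the snapshot look far sicker than the meat eaters, even though the diet did nothing to them. A one-day survey cannot tell the difference between “the diet made them sick” and “being sick made them change their diet.”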

Well, at least we should be able to discern exactly what “vegetarian” means, right? We should at least know that these vegetarians are in fact eating vegetables, right? Again, no such luck. The researchers labeled people but never assessed their exact meal plan. There is no mention of how many fruits and veggies they were eating. In fact, the study found that vegetarians were less likely to pursue preventive health measures, which in itself could explain their poor health. Many ethical vegans who avoid meat for moral reasons consume unhealthy diets devoid of fruits and veggies.

Overall, we’re looking at bad science. Had a student turned this in, I would have had to give it an F, regardless of the findings, based just on the poor experimental design. Their conclusion is not worth the paper this would have been printed on, had it actually been printed. Instead, this utter waste of time wound up in an online journal. The methods and findings were not subject to debate at a large scientific conference, where it certainly would have been laughed off the plenary floor.

In the end, this study would never have been looked at again, except that the media love a good controversial study that supports our prejudices. Now, mentions of this article are showing up all over the Internet as if a Nobel Prize winner from Harvard Medical School had just completed a thirty-year prospective study and published the results in the New England Journal of Medicine. Do you still wonder why we’re so confused?

Example: Misleading Paleo Weight-Loss Study

Another study that garnered lots of media attention purportedly “proved” that a Paleo diet can help people lose weight and prevent diabetes. On the surface, the study looks legit. It’s full of medical jargon and features incredibly careful, precise, and well-considered outcome measures. The average layperson would not be able to understand it; therefore, the responsibility for analyzing and critiquing the study falls upon science journalists. Unfortunately, most journalists and bloggers simply reported the conclusions and paid no attention to the ridiculous study design.

The study, published in 2013 in the Journal of Internal Medicine, is titled “A Palaeolithic-Type Diet Causes Strong Tissue-Specific Effects on Ectopic Fat Deposition in Obese Postmenopausal Women.” The researchers, all from prestigious European institutions, fed a Paleo diet (30% protein, 40% fat, and 30% carbohydrate) to 10 obese but otherwise apparently healthy postmenopausal women for five weeks. The women lost an average of 10 pounds and lost some fat from their livers. They also improved on some other measures, including blood pressure, cholesterol, and triglycerides. This suggests, according to the authors, that a Paleo diet can be protective against diabetes, as fatty liver appears to be a precursor to that disease.

Sounds reasonable, right? It’s only when we go a bit deeper that we discover the craziness of the entire study and its design. First, there was no control group. Why is this important? Because the Paleo diet used in the study had an interesting characteristic: the women consumed 25 percent fewer calories on the Paleo diet than on their previous diet.

Here’s a useful piece of scientific jargon for you: Big Deal.

That’s the correct response to this study. A group of women ate 25 percent less and lost weight? Big Deal. Drop 25 percent of calories on any diet and you will lose weight. Lose weight and your cholesterol, blood pressure, and triglycerides will improve. Remember Professor Haub and his 1,800-calorie junk food diet?

A control group that also dropped caloric intake by 25 percent would have achieved the same results, at least. Even had they been eating Twinkies and drinking Coke, they would have seen improvements in those measures just through caloric restriction. The results had nothing to do with the Paleo part of the diet.

Remember the title of the article? It included the phrase “strong tissue-specific effects.” This sounds important, right? It turns out that the calorically restricted diet led to fat loss in the liver, but not in the calf muscles (the other place the researchers measured). The article interprets this fact to mean that the Paleo diet has some sort of specific magical effect on fat in the liver; hence its effectiveness in preventing diabetes.

The truth is, any weight loss will bring about fat loss in the liver. What’s more interesting is that there was no fat loss in the muscles. That’s the fat you want to lose to become healthy. Another fact—probably the most important fact to come out of the study—was this: “Whole-body insulin sensitivity did not change.” In other words, there was absolutely no effect on the mechanism that causes diabetes. This makes perfect sense, given that there was no decrease in intramyocellular (muscle) fat. We will discuss this in the diabetes chapter, but fat in the muscle determines how sensitive you are to insulin, and hence how susceptible you are to developing diabetes. Here, the subjects lost weight and yet they were not able to lose fat from their muscles.

To their slight credit, near the end of the discussion section the researchers did acknowledge the possibility that their entire study was pointless: “This suggests that macronutrient composition is important, although the possibility cannot be excluded that the same result would be obtained with different food choices of identical macronutrient compositions” (italics added).

This is the problem with PubMed. Journalists and bloggers reference articles but don’t actually read the full articles. They rely on misleading abstracts that hide the design flaws and real findings of the study, and they generally lack the time, training, or incentive to uncover and report the truth.

Example: Death by Journalism

As I mentioned earlier, journalists are replacing scientists and clinicians as the public’s go-to source of health advice. For example, a June 2014 Time magazine cover instructed us to eat butter!

There are hundreds, if not thousands, of scientific articles showing that saturated fat from animal sources is hazardous to your health. In fact, Finland has greatly reduced its huge rates of cardiovascular disease by specifically decreasing butter, as well as increasing fruits and veggies (Laatikainen, Critchley, et al. 2005). How can the honorable Time magazine and so many other media outlets get it so wrong?

The Time article was based on two meta-analysis studies. A meta-analysis combines the data from many smaller studies and looks for trends. Ideally, meta-analyses correct for small sample size and chance findings by zooming out and evaluating the preponderance of evidence. Unfortunately, the meta-analyses selected by the Time reporter did not show that butter and other sources of saturated fat are good for us. Instead, they concluded that the relationship between saturated fat and heart disease may not be as strong as previously thought.

Many scientists have shown that these articles ignore a large body of evidence to the contrary (Pedersen, James, et al. 2011). In fact, they made significant scientific errors (Kromhout, Geleijnse, et al. 2011; Stamler 2010), not to mention the fact that the authors received money from meat and dairy organizations.

Leaving aside these significant problems with those meta-analyses (which really were outrageously biased in the way they cherry-picked the studies to include) and with the studies they looked at (almost all of them performed a very sneaky trick called “overadjustment” to make the link between heart disease and saturated fat appear much smaller than it really is), Time magazine still took breathtaking liberties in concluding that we should eat butter. None of the studies, nor either meta-analysis, claim that fat is good for us, or that we should be eating more of it.

But if you were in the business of selling magazines, the cover proclaiming “Eat Butter” generates a lot more newsstand sales (and future food industry advertising) than “Eat Kale.”

Understand this: while doctors are experts in their chosen fields, they are probably getting their nutrition advice from Time magazine and other mainstream sources. That’s how I was prior to my recovery from proteinaholism. My profession needs to step up and stop believing the third-hand reports from “true believer” bloggers on secondhand articles from ignorant journalists about badly designed and questionably funded research studies.

The Journal of the American Medical Association recently published an article about how to actually read and analyze a meta-analysis study. The article’s authors advise, “Clinical decisions should be based on the totality of the best evidence and not the results of individual studies. When clinicians apply the results of a systematic review or meta-analysis to patient care, they should start by evaluating the credibility of the methods . . . the degree of confidence . . . the precision and consistency of the results . . . and the likelihood of reporting bias” (Murad, Montori, et al. 2014).

How to Tell Fact from Fiction

We’re living in an age of information overload, and many people are taking advantage of this situation by promoting ambiguity and confusion around diet and health. As you’ve read this far, you may even entertain the thought that I’m just adding to your confusion. After all, in the next several chapters, I’ll be sharing evidence that may contradict everything you’ve ever heard about the health benefits of protein.

To avoid that problem, I’m going to share my research process with you. I’ll show you in detail how I make decisions about treatment protocols and patient recommendations based on the evidence, in spite of the noise created by irresponsible journalism and shoddy science. While you may not be able to access all the original articles, and you may not have the scientific background to evaluate and interpret every study, at the very least you can begin to ask the questions that can separate fact from fiction, and distinguish real science from profit-driven BS.

Here are my rules for being an educated consumer of health information.

1. Never believe anything you find in a newspaper, magazine, blog, or TV or radio story.

As we’ve seen, these second- and third-hand accounts are often based on sloppy perusals of abstracts rather than nuanced readings of the full studies. They typically suffer from conflicts of interest. And they cherry-pick what’s new and controversial rather than what’s old and established beyond doubt.

That’s not to say you should bury your head in the sand and never read newspapers or blogs, or watch TV news. Rather, use those secondary sources as pointers to the original research. If you can’t access the real study, you’ll have to rely on scientists you trust to give you an accurate interpretation. (Hopefully, I’ve become one of those scientists you trust. If you follow me at Facebook.com/drgarth you can read my ongoing critiques of nutrition and health studies.)

2. Never trust a single source in isolation.

This goes back to the “orange pixel in the blue sky image” problem I discussed at the beginning of this chapter. You have to become aware of the breadth of research (in the rest of this section, I share that context with you). One paper cannot prove or disprove anything. No matter the study, I use it as a single data point within a complex algorithm for making medical recommendations to my patients. Don’t fall for the pseudoexperts online who use single studies to prove points while ignoring the preponderance of evidence to the contrary. I’m not saying that every new finding is worthless; rather, be suspicious of outlier data and demand replication of the finding in larger, well-designed studies.

3. Consider the source

Some researchers are more trustworthy than others. When you encounter a research article, look at the authors. What institution are they affiliated with? Where did they get their funding for this study? Many journals require authors to state any potential conflicts of interest; others are not so stringent. Sometimes researchers hide their funding by taking industry money for unrelated research so they don’t have to declare it in any particular article. “Following the money” isn’t foolproof, and unless you’re a detective, you’ll miss a lot, but it’s a necessary step in determining credibility. Remember that funding almost invariably influences the outcome of a study, even if the researchers aren’t consciously altering their design or conclusions.

You can also reference other articles they’ve written by finding their institutional biography online. Believe it or not, money may influence their results less than pride. There are academics who have devoted their entire careers to proving that low-carb diets are the healthiest choice. When you examine their history of published works, you find that pretty much everything is on the same topic. Once they establish “guru” status, it’s mighty hard for them ever to see evidence that disproves their beliefs. I’ve found with many of them, God could descend from heaven and debunk their arguments, and they would still hold fast to their incorrect views.

Again, a history of holding a particular view doesn’t automatically discredit it; rather, it alerts me to the potential of “pride bias.” And please don’t think I’m playing favorites here; the tendency to keep finding the results you expect applies to plant-based researchers as well.

4. Consider the study design.

In medical science, the randomized controlled clinical trial (RCCT) is widely assumed to be the “gold standard” of research. Before I argue with this view, let me explain what it means, working backward:

Trial: an experiment, rather than an observational study. In other words, a trial takes a bunch of people and does something to them, then reports on the result.

Clinical: in a clinical setting, with medical professionals monitoring the progress of the trial and the patient outcomes.

Controlled: including an additional group or groups that gets no treatment, or a variation of the main treatment, to make sure the reported outcome was a result of the specific treatment. For example, the Paleo weight-loss study discussed earlier in this chapter would have benefited from a control group of women who were given a diet identical in calories consumed, but different in macronutrient composition. This would have clarified whether the results were due to the Paleo diet, or simply due to caloric restriction and subsequent weight loss.

Randomized: where participants have an equal and random chance of being assigned to any of the experimental or control groups. Randomization ensures against creating groups that are so different at the start of the trial that any differences in outcome could be due to those initial differences.
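For readers who like to see a definition in action, here is a minimal sketch of what random assignment can look like. The participant labels, the group names, and the helper `randomize` are all hypothetical, made up for illustration; real trials use more elaborate schemes.

```python
import random

def randomize(participants, arms, seed=None):
    """Shuffle the participants, then deal them out to the arms in turn.

    Every participant has the same chance of landing in any arm, and the
    arms end up the same size (give or take one person).
    """
    rng = random.Random(seed)      # a seed makes the example repeatable
    shuffled = list(participants)  # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    groups = {arm: [] for arm in arms}
    for position, person in enumerate(shuffled):
        groups[arms[position % len(arms)]].append(person)
    return groups

# Hypothetical roster of 20 volunteers split into two groups.
participants = [f"participant_{n:02d}" for n in range(1, 21)]
groups = randomize(participants, ["treatment", "control"], seed=42)

for arm, members in groups.items():
    print(arm, len(members))
```

Because chance alone decides who goes where, neither the researchers nor the volunteers can stack one group with, say, the most motivated dieters.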

If we are studying the effects of a new drug or surgical procedure or screening protocol, then the RCCT makes a great deal of sense. It works best when we want to introduce a single variable and keep everything else constant, to see if that variable makes a difference. But when we use the RCCT to decide the effects of various diets on chronic diseases, the model breaks down. There are too many variables that are important, and too many conditions to look at.

Also, it’s impossible to randomize people to diets for more than a couple of months. People simply don’t adhere to strict diets for the length of time it would take to see real changes in health. RCCTs typically try to overcome this problem by shortening the length of the trial. But there’s a huge and often fatal trade-off: short trials can’t look at clinical outcomes, like death, heart attacks, cancer, and onsets of diabetes. So researchers instead look at isolated lab values of biomarkers that are correlated with disease, may be predictive of disease, but do not necessarily equate to disease.

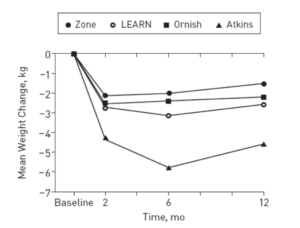

In Chapter 6, we discussed one of the most famous diet RCCTs, the 2007 A to Z Trial. It’s a perfect example of the problem with using RCCT design to study diet. Set up as a one-year trial, the study randomized participants into one of four diets: Atkins, LEARN, Ornish, and Zone. The Atkins dieters were instructed to consume fewer than 20 grams of carbs per day for the first couple of months, and then fewer than 50 grams per day for the remainder of the trial. LEARN dieters included moderate exercise, 50 to 60 percent of calories from carbs, and less than 10 percent from saturated (mostly animal) fat. The Ornish dieters were told to keep their fat intake to 10 percent or less of total calories. The Zone was set up as a 40 percent carb/30 percent protein/30 percent fat diet.

After one year, the Atkins dieters had lost some weight, while the other three groups had not. The Atkins corporation jumped on the findings, and the media dutifully reported that a low-carb diet was crowned weight-loss champion. There were many problems with this study, and most of them derived from the RCCT design; specifically, the randomization. Participants who hadn’t chosen the diets, hadn’t bought into the lifestyles, and lacked any strong commitment to them simply didn’t follow the study guidelines. A true plant-based diet that doesn’t rely on processed junk food should provide at least 40 grams of fiber per day. The Ornish group got an average of just 20 grams. I’m not sure what these so-called vegetarians were eating, but it sure wasn’t vegetables.

Mean Dietary Intake and Energy Expenditure by Diet Group and Time Point*

Oh, and remember that 10 percent fat guideline for the Ornish dieters? They sort of exceeded it . . . landing at about 300 percent of the target. Before the trial began, the Ornish dieters were getting about 35 percent of their calories from fat. At two months, they had managed to drop that to 21 percent. And that was as compliant as they were ever going to get. At six months, they were up to 28 percent, and by the end of the study they were close to their original diet, obtaining a whopping 29.8 percent of calories from fat.
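The arithmetic behind that comparison is simple enough to check yourself. The sketch below uses only the percentages quoted above; the variable names and the helper `multiple_of_target` are illustrative assumptions, not anything taken from the trial itself.

```python
ORNISH_FAT_TARGET = 10.0  # guideline: percent of total calories from fat

# Fat intake of the Ornish group as quoted in the text (percent of calories).
reported_fat_pct = {
    "baseline": 35.0,
    "2 months": 21.0,
    "6 months": 28.0,
    "12 months": 29.8,
}

def multiple_of_target(observed_pct, target_pct=ORNISH_FAT_TARGET):
    """How many times the guideline the observed intake represents."""
    return observed_pct / target_pct

for time_point, pct in reported_fat_pct.items():
    ratio = multiple_of_target(pct)
    print(f"{time_point:>9}: {pct:.1f}% of calories from fat, {ratio:.2f}x the target")
```

Even at their most compliant, at two months, the group was eating roughly double the fat the guideline allowed.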

Yet the media trumpeted the Atkins victory over the “strict” Ornish diet. Of course, there was no Ornish diet under study. Instead, it was a group of people who steadfastly ignored the Ornish guidelines and ate pretty much whatever they wanted. That’s what happens when you try to randomize people into lifestyles—they rebel. It’s another important trade-off to remember: the more “airtight” the experimental design, the less it resembles real life and the less applicable the results.

Oh, and the following graph, taken from the A to Z Trial, shows that the Atkins dieters, like the others, were well on their way to putting the weight back on (a commonly observed outcome in a diet that’s inherently unsustainable).

5. Question the choices of statistical analysis

You might suspect that once the study is complete and the data have been gathered, that’s the end of the scientific process. In fact, the way the data are analyzed and adjusted can introduce higher levels of clarity—and can also turn real findings on their heads. Mark Twain’s phrase about the three main kinds of falsehoods (“lies, damn lies, and statistics”) has never been truer than in nutrition studies.

Ideally, statistical analysis tells us the likelihood that a given result represents a true outcome, rather than random chance. For example, if you flip a coin three times and it comes up tails each time, is that enough evidence to declare the coin “fixed”? How about ten times, all tails? Fifty? Three thousand? Statistics help us put outcomes in perspective, so we don’t over- or underattribute significance to them.
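To put numbers on the coin example, here is a small sketch that assumes a perfectly fair coin and independent flips. The function name `prob_all_tails` is made up for illustration.

```python
from fractions import Fraction

def prob_all_tails(flips: int) -> Fraction:
    """Chance that a fair coin lands tails on every one of `flips` tosses."""
    return Fraction(1, 2) ** flips

for flips in (3, 10, 50):
    p = prob_all_tails(flips)
    print(f"{flips:>2} tails in a row: 1 chance in {p.denominator:,}")
```

Three tails in a row happens by luck one time in eight, so it proves nothing. Ten in a row happens about one time in a thousand, and fifty in a row is so unlikely with a fair coin that "fixed" becomes by far the better explanation.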

Statistical adjustment also allows researchers to find nuggets of truth that may otherwise be buried or obscured by other data. In the Austrian “vegetarians are less healthy” study, a useful adjustment would have been to examine how long the participants had been vegetarian, or whether their conversion had been motivated by a health scare.

The most misleading adjustments are those that mistakenly treat a link in the causal chain as if it were one of what are known as “confounding variables.” Most of the studies included in the meta-analyses cited by the Time magazine article on saturated fat committed this error by adjusting for serum cholesterol. The problem is, saturated fat causes heart disease in part by raising cholesterol. By adjusting away the effect of high cholesterol, the researchers effectively removed from the comparison those people most susceptible to the heart-disease-causing effects of saturated fat. This is known as “overadjustment bias,” and it’s the easiest way to torture the data to get the answers you want.
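A toy simulation can make overadjustment concrete. Everything in this sketch is invented for illustration: the population is simulated, and the effect sizes (0.1 for a direct effect of saturated fat, 0.3 for the effect that runs through cholesterol) are arbitrary assumptions, not estimates from any real study.

```python
import random

def simulate(n=20000, seed=0):
    """Invented population: fat raises cholesterol, and both raise risk."""
    rng = random.Random(seed)
    fat, chol, risk = [], [], []
    for _ in range(n):
        f = rng.gauss(0, 1)                      # saturated fat intake
        c = 1.0 * f + rng.gauss(0, 0.5)          # cholesterol rises with fat
        r = 0.1 * f + 0.3 * c + rng.gauss(0, 1)  # risk: direct + via cholesterol
        fat.append(f)
        chol.append(c)
        risk.append(r)
    return fat, chol, risk

def centered_sum(a, b):
    """Sum of products of deviations from the means."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    return sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))

def unadjusted_slope(x, y):
    """Simple regression of y on x: the total effect of x."""
    return centered_sum(x, y) / centered_sum(x, x)

def adjusted_slope(x, m, y):
    """Coefficient of x when y is regressed on both x and m."""
    sxx, smm, sxm = centered_sum(x, x), centered_sum(m, m), centered_sum(x, m)
    sxy, smy = centered_sum(x, y), centered_sum(m, y)
    return (sxy * smm - smy * sxm) / (sxx * smm - sxm ** 2)

fat, chol, risk = simulate()
total = unadjusted_slope(fat, risk)
overadjusted = adjusted_slope(fat, chol, risk)
print(f"effect of fat, unadjusted:               {total:.2f}")
print(f"effect of fat, adjusted for cholesterol: {overadjusted:.2f}")
```

In this made-up world the true total effect of fat on risk is 0.4, yet once cholesterol is "adjusted for," the estimate shrinks toward the 0.1 direct effect. The harm that travels through cholesterol has been subtracted out of the answer.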

6. Are they doing real science?

Good scientists are humble and cautious. No study is perfect; all involve trade-offs between significance and speed, and accuracy and applicability to the real world. Even the best-designed and most comprehensive study must be replicated by others to ensure its findings are more than a chance blip. Scientists who do good work share their work transparently within the research community, so that others can try to disprove their findings.

Most scientific articles have a discussion section where the authors evaluate and discuss the relevance of the findings. These discussions usually include the authors’ opinions of the shortcomings of the experimental design. It is critical to read and understand this to better understand the significance of the results.

7. Do the findings make sense in the real world?

Before I accept a result and use it to inform patient care, I have to analyze it based on my knowledge of the total body of research, the fundamentals of anatomy and physiology, and my own professional experience. I am in a unique position because I get to see how diet works in real life with real patients over months and years. Many studies are authored by nonphysicians, which is problematic when they come up with hypotheses without appropriate context and reach conclusions without real-life application.

When scientists lack clinical acumen about the subject of their research, they are in danger of completely missing the application of their findings. Recently I attended an Obesity Week scientific meeting where many of the experts in obesity research come to present their latest findings. One Ph.D. presented her work on insulin resistance. She hypothesized that insulin resistance is due to pancreatic beta cell dysfunction caused by high iron in the diet. It turns out that when we eat iron it becomes oxidized, and oxidized iron is harmful to the beta cells of the pancreas, which can no longer secrete insulin optimally. Her experimental design was excellent and her animal studies and human studies were convincing. At the end of the presentation an audience member asked the presenter about her own diet. My mouth dropped to my shoes when she replied that she ate a low-carb/high-protein diet. What? A high-protein diet usually means high meat consumption, and high meat consumption means high iron consumption. Here’s a brilliant scientist who is eating a diet that actually contradicts her science!

Even being a physician is no guarantee against this pitfall, as I know only too well. In my last book I recommended a high-protein diet, while in practice I saw people struggling with this diet, looking ill, and gaining weight.

One example where I paid attention to context was my experience with the laparoscopic (lap) band. The lap band, a device that essentially constricts the opening where the esophagus joins the stomach, came out in the mid-2000s. My knowledge of physiology made me suspicious about its efficacy, as prior attempts at placing foreign bodies on the stomach had failed. They would erode or cause motility issues in which the esophagus failed to empty fully or rapidly enough into the stomach. Also, I knew that the gastric bypass worked by altering gut hormones that controlled hunger, while the band did not alter hormone production. So I avoided using the band initially.

However, several surgeons published very promising data regarding the band’s success. I now realize these surgeons built their practice around the band. They had financial incentive as well as academic pride vested in promoting the technology, both of which led to biased research and reporting. But after prolonged exposure to their copious and seemingly well-organized data supporting the lap band, I finally gave in and started using it in my practice. I performed many lap band surgeries in the mid to late 2000s, always warning the patients about my reservations. As I expected, success was well below what was promised, and the rate and severity of complications were much higher. I stopped doing the bands. Meanwhile, the financially and ego-motivated lap band cheerleaders continued to publish articles with a positive spin. After several years, it was universally apparent that the real-life application failed to resemble what was promised by the science.

Honoring True Expertise

In addition to reviewing the studies, evaluating the research methods, and comparing the findings to my own clinical experience, I like to read and engage in discussion with physicians and scientists with years in the field. Social media fosters a pernicious distrust of true experts, often because those experts dismiss social media amateurs for what they are. An expert has reviewed all relevant studies and has analyzed them. Many times we do not see eye to eye, but I certainly can learn a lot from people with years of experience. In fact, one of the marks of a true scientist is an eagerness to go nose to nose with those who disagree. No Google search could ever provide you with the wisdom you can glean from a scientific meeting. Listening to the experts review the available data with the benefit of their deep and broad experience is a completely different experience than reading blogs and Facebook feeds. At these meetings we discover new research, share expert critiques of that research, and engage in lengthy debates, both in the auditoriums and later at the bars.

I can attest that there are in fact many experts who spend their waking hours exploring vital questions rather than padding their résumés or bank accounts, scientists whose only agenda is truth, wherever it may lead them. One such expert of the last century, Albert Einstein, once said, “Nothing will benefit human health and increase the chances for survival of life on earth as much as the evolution to a vegetarian diet.” Of course, Einstein was merely speculating at the time. Since then, however, science has advanced considerable proof of the importance of, at the very least, increasing fruits and veggies and limiting meat and dairy. While it may appear that scientists are constantly disagreeing, there is in fact a broad consensus that including plants and limiting animals in our diets is the single best thing we can do for our health.

One reason you may not hear these things is that the true scientists simply don’t have time to Tweet snarky, grandiose statements in 140 characters or fewer. A true scientist is always second-guessing and always learning. Let’s look at how some real scientists, untainted by financial conflicts of interest or boastful pride, weigh in on the topic of human nutrition.

Kaiser Permanente is the country’s largest health maintenance organization (HMO). HMOs make money by keeping people healthy, not through treatment. Unlike the traditional health-care models, Kaiser, a nonprofit, collects money from its subscribers, and the cost of whatever tests or treatments need to be done comes out of the subscription fee. Therefore, Kaiser is motivated by a strong financial incentive to actually keep people well. After thoroughly reviewing the latest science, Kaiser researchers released recommendations to all their doctors emphasizing the importance of recommending a plant-based diet to their patients. The science was convincing enough for this very large health-care organization, whose 2014 operating budget totaled more than $56 billion.

In 2007, the World Cancer Research Fund teamed up with the American Institute for Cancer Research and the World Health Organization and got experts from around the world to review all research on diet and disease. They produced a huge report called “Food, Nutrition, Physical Activity and the Prevention of Cancer: A Global Perspective.”

From the introduction: “This was a systematic approach to examine all the relevant evidence using predetermined criteria and assemble an international group of experts who, having brought their own knowledge and experience to bear, and having debated their disagreements, arrived at judgments at what all this evidence really means. We reviewed all the relevant research using the most meticulous methods, in order to generate a comprehensive series of recommendations on food and nutrition designed to reduce the risk of cancer.”

So what did this huge, meticulous review of all the science by the world experts conclude? Among other things, reduce meat. There was a clear correlation between meat consumption and many forms of cancer. The report’s experts recommended that diets be mostly of plant origin. While the report looked specifically at cancer, the researchers noted that the same diet promised protection from heart disease as well. And subsequent studies have already shown that following these guidelines does lead to less cancer (Vergnaud, Romaguera, et al. 2013).

The Academy of Nutrition and Dietetics (formerly known as the American Dietetic Association) recently released a statement that vegetarian diets “are healthful, nutritionally adequate, and may provide health benefits in the prevention and treatment of certain diseases. Well-planned vegetarian diets are appropriate for individuals during all stages of the life cycle, including pregnancy, lactation, infancy, childhood, and adolescence, and for athletes” (Craig, Mangels, et al. 2009).

The National Research Council, the American Heart Association, the American Institute for Cancer Research, and many more have emphasized the importance of cutting back on animal protein and eating more fruits and veggies. They may not say, “Go vegan,” because they are trying to be middle of the road. The Harvard School of Public Health has published numerous excellent articles showing that animal protein and fat relate to disease. One of their top researchers was asked why they do not explicitly tell people to become vegetarian when the evidence so clearly supports this recommendation. His response in a Reuters interview was telling: “We can’t tell people to stop eating all meat and dairy products. Well, we could tell all people to be vegetarians. . . . If we were truly basing this on science we would, but it is a bit extreme.”*

Finally, the “Dietary Guidelines for Americans,” released by the U.S. Department of Health and Human Services, includes an article titled “Finding Your Way to a Healthier You.” Recommendations included focusing on fruits and veggies and supplementing them with grains and lean proteins, as well as fish, beans, and nuts and seeds. Despite the amount of industry pressure bearing down upon this committee, they still found the science compelling enough to stop recommending meat in favor of predominantly plant-based alternatives.

Denialism

An enormous body of research shows that a diet high in animal protein can contribute to disease. Despite this evidence, there will always be naysayers: people who will never allow themselves to be convinced, regardless of the evidence. Highly vocal, they often dominate public conversation and confuse people with their cherry-picked, distorted, and outright false data. A 2009 scientific article explored this phenomenon of “denialism,” and explained the classic methods used to promote head-in-the-sand refusal to see facts (Diethelm and McKee 2009). I’ll summarize its conclusions on scientific denialism so you can better understand the noise you will hear from those who stalwartly oppose all forms of evidence.

1. Denialists believe peer-reviewed journal articles are some sort of conspiracy.

If there is an article that counters their belief, denialists will invent a conspiracy to slander the findings. For example, they contend that medical societies are conspiratorial organizations created with the express purpose of fooling the public. In fact, it is very difficult to get doctors and scientists to agree on anything, much less a global secret conspiracy. Yes, there are individual articles that, as I have mentioned, have been tainted by monetary influence. However, peer review will bring these to light. When you present an article at a scientific conference, you are required to list your financial interests.

2. Denialists like to denigrate experts.

Having been raised to respect experience and expertise, I find this stance extremely odd. When I argue with people online, if I refer to an expert in the field, the comment is often waved off as “appeal to authority.” Why would you not want to hear from an authority who has studied a topic all of his or her life?

There was an interesting event online that will shine more light on this. A young schoolteacher published a blog aimed at discrediting the work of T. Colin Campbell, author of The China Study. The blog gained much publicity in Weston A. Price Foundation circles, and I have since heard many people claim that “the China study has been discredited” and reference this blog for proof. The blogger had basically looked at the raw data that Dr. Campbell collected and saw that one region of China ate lots of wheat and had a high amount of heart disease, which is counter to what was asserted in the book. She performed what is known as a univariate analysis, which simply doesn’t work in research like that done by Dr. Campbell in China that looked at over eight thousand unique variables. She did not look at any other factors that could have caused the relationship. Turns out this region of China ate very few vegetables and lots of meat. An epidemiologist commented on her blog, “it was crude and irresponsible to draw conclusions based on raw, unadjusted, linear, and nondirectional data.” The blogger responded with hostility to criticism from any “authorities,” claiming her right to interpret highly complex scientific data without training or expertise as equal, if not superior, to theirs. Dr. Campbell responded to this hubris in measured fashion:

“I am the first to admit that background and academic credentials are not everything, and many interesting discoveries and contributions have been made by outsiders and newcomers in various fields. On the other hand, background time in the field, and especially peer review, all do give a one-of-a-kind perspective.”

He explained that the biochemical effects he saw in his lab studies established fundamentals and concepts that lead to biologic plausibility that he went into the field to test. The China survey is just one point in a lifetime of study for a man who dedicated his career to investigating the effects of diet on health. It’s simply irresponsible to brush off someone with so much knowledge and experience without trying to gain insight into their research methodology and proficiency with their statistical tools.

3. Denialists cherry-pick the articles that suit their prejudice.

They consider valid only those articles that support their existing point of view, and they ignore or denigrate the rest. As mentioned, true scientists doubt their own views and rigorously and mercilessly test their hypotheses. They also look at the full breadth of available information, actively seeking out opposing interpretations, before judging the validity of a single article (Murad and Montori 2013). Funny enough, should you present a bunch of studies to denialists, they will accuse you of cherry-picking.

4. Denialists create impossible expectations of research.

To a denialist, nothing is true unless the study is a randomized, placebo-controlled, prospective study conducted over many years. Earlier in this chapter, I defined the randomized controlled clinical trial. Denialists want to add one more feature to that trial: placebo control. In drug trials, it’s vital to control for the placebo effect (patients often get better simply because they believe they’re undergoing an effective treatment) so we don’t mistakenly attribute effectiveness to a drug when the real benefit is due to the patients’ beliefs. A common way of doing this is to give one group the active drug, and another group an identical-looking, inert sugar pill. Drug trials are usually “double-blinded,” whereby neither study participants nor researchers have any idea who is in the “experimental” group and who is getting an inactive placebo.

In nutritional research that is just not possible. As we saw in the A to Z Trial, when you put individuals on a diet and try to study them long term, there is a very good chance that some will quit the diet. The treatment and the control groups start looking very similar, causing the trial to fail (Willett 2010). And can you imagine trying to blind participants to what they’re eating? You’d have to slap blindfolds on them and feed them through a tube. Obviously, the real-life value of such research would be nil.

Denialists’ favorite type of research to criticize is epidemiologic research. Epidemiology is the study of populations over time to ascertain causes of disease. The denialist will tell you that epidemiologic studies show correlation but not causation and then dismiss the article. My question is, What in the world is wrong with finding correlation? If we couldn’t use correlation, we’d never be able to claim that smoking causes lung cancer, emphysema, or heart disease. If an RCCT were the only standard of proof, we’d have to randomly assign a bunch of people to start smoking to see if they would develop those diseases.

Modern-day epidemiology uses all kinds of fancy statistical methods that can identify causal relationships as well as trends. If you are looking at, for example, the relationship between saturated fat and heart disease, you can account for other possible causes of heart disease using a statistical tool called “multivariate analysis.” As long as you adjust for the right variables (such as smoking, which could itself contribute to heart disease), you can find correlations solid enough to inform private decisions, clinical practice, and public policy.
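
For readers who like to see the mechanics, here is a minimal sketch in Python of what adjusting for another variable does. Everything in it is an assumption made for illustration: the data are simulated, not taken from any real study, the names `exposure`, `confounder`, and `outcome` are hypothetical stand-ins (think saturated fat, smoking, and heart disease risk), and the effect sizes are arbitrary. It uses the simplest possible adjustment for a single extra variable, which is far cruder than what a real epidemiologic study would do.

```python
import random


def slope(x, y):
    """Least-squares slope of y on x (one predictor, with an intercept)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


def residuals(x, y):
    """What remains of y after removing its linear dependence on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    b = slope(x, y)
    return [(yi - mean_y) - b * (xi - mean_x) for xi, yi in zip(x, y)]


def adjusted_slope(exposure, confounder, outcome):
    """Slope of outcome on exposure after adjusting for the confounder.

    Both variables are first stripped of whatever the confounder explains;
    the slope between the leftovers equals the exposure coefficient from a
    regression that includes both exposure and confounder.
    """
    exposure_left = residuals(confounder, exposure)
    outcome_left = residuals(confounder, outcome)
    return slope(exposure_left, outcome_left)


def simulate(n=5000, seed=0, true_effect=0.5):
    """Simulated (not real) data in which the confounder drives both the
    exposure and the outcome. All coefficients are arbitrary assumptions."""
    rng = random.Random(seed)
    confounder = [rng.gauss(0, 1) for _ in range(n)]
    exposure = [0.8 * c + rng.gauss(0, 1) for c in confounder]
    outcome = [
        true_effect * e + 1.0 * c + rng.gauss(0, 1)
        for e, c in zip(exposure, confounder)
    ]
    return exposure, confounder, outcome


if __name__ == "__main__":
    exposure, confounder, outcome = simulate()
    print("unadjusted slope:", round(slope(exposure, outcome), 2))
    print("adjusted slope:  ", round(adjusted_slope(exposure, confounder, outcome), 2))
```

In this simulated setup, the unadjusted slope overstates the exposure's effect because it absorbs part of the confounder's influence, while the adjusted slope should land close to the built-in value of 0.5. Looking at one variable in isolation misleads in exactly this way, and the adjustment only helps if the right variables were measured in the first place.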

Modern-day statistical analysis is very powerful. The studies may show correlation, not causation, but if there is a significant correlation in a modern epidemiologic study, you had better believe that where there is smoke there is fire. In fact, it is more common for rigorous statistical methods to erase correlations that really exist than to find false ones (Jacobs, Anderson, et al. 1979). If a well-done, modern, peer-reviewed epidemiologic study shows a correlation, you should definitely take note.

As Dr. Walter Willett puts it, “Large nutritional epidemiology studies, with long-term follow-up to assess major clinical end points, coupled with advances in basic science and clinical trials, have led to important improvements in our understanding of nutrition and the primary prevention of disease” (Willett and Stampfer 2013).

5. Denialists misrepresent data.

They take statements out of context and deliberately misread conclusions. Critics of Gary Taubes, a popular low-carb author, note that he takes much of his “evidence” out of context. In fact, one of the articles he used to argue that saturated fat does not cause heart disease actually found the very correlation he was denying.

Denialists gain influence not from wisdom or authority, but from repetition. Most people know that an unclouded, daytime sky is blue.